The Most Dangerous Developer Isn’t a Hacker. It’s You.

AI made coding easy. But without experience, easy becomes risky fast.

Hi friends 👋,

I’ve been coding for over 12 years. Back in the Rails days, I built tools to generate boilerplate and speed things up. So code generation isn’t new to me.

I’m optimistic about the future of vibecoding. But that doesn’t mean we can ignore the potential risk of skipping the hard parts developers used to handle.

I got the idea for this post after seeing someone who didn’t know how to code launch a successful AI-built app and accidentally leak their API keys in production.

I’m not saying that never happens to experienced developers. But it made me pause.

Because the person who built it didn’t know how to code, they wouldn’t know what to prompt to fix the issue effectively. That’s what stuck with me.

It points to something bigger. A whole wave of people building software without understanding how software actually works.

And since more people are asking whether developers are still necessary, this post is my answer.

AI can build a product, but not all products are created equal.

If you're trusting AI to build your app, this will help you understand when working with an experienced developer becomes the difference between something that works and something that lasts.

Let’s dive in.

The Prompt-and-Pray Era

“AI will not replace developers. But it will make bad developers dangerous.”

Somewhere right now, a 15-year-old is launching an AI-powered SaaS app using nothing but prompts and good vibes.

No Git setup to manage version control.

No database security to prevent leaks or attacks.

Hardcoded API keys that hand hackers access to your third-party services.

Just sheer will and Cursor.

We’ve officially entered the vibecoder era.

It’s a world where anyone with a prompt and a dream can write code, ship apps, and accidentally deploy SQL injection vulnerabilities at scale.

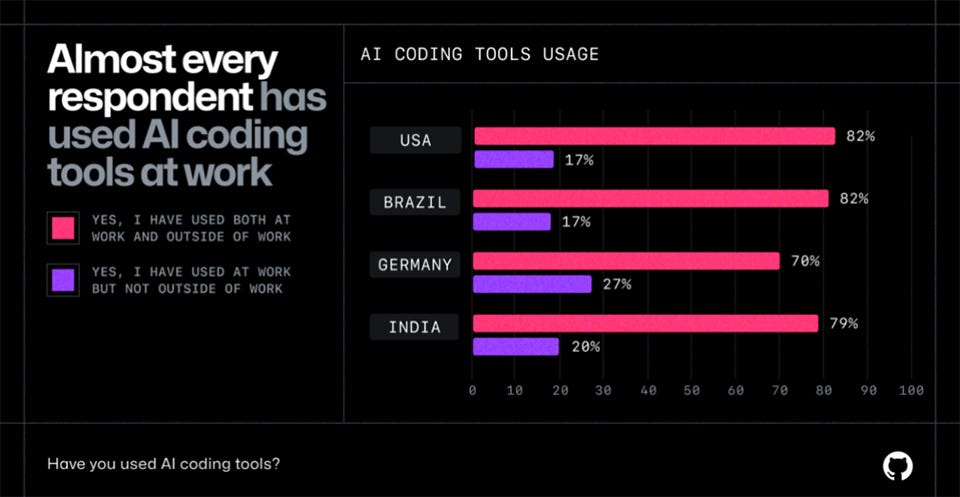

From 2024 to 2025, AI-assisted coding took over North America like Spotify Wrapped took over your December.

Nearly every developer has tried it (97%, per GitHub). And not just developers.

Everyone.

Welcome to the paradox: AI tools are accelerating productivity across the stack but also making it easier than ever to build broken things fast.

Productivity Is Up. So Are the Unknown Unknowns.

Let’s start with the good news.

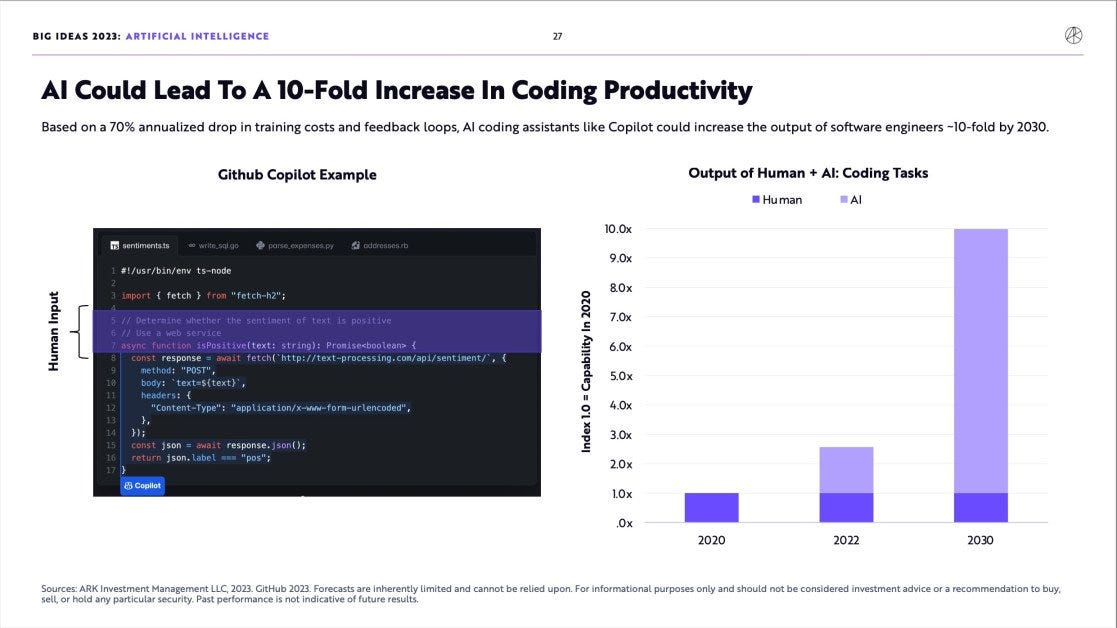

AI coding tools are wildly effective.

Developers using GitHub Copilot, Claude, and ChatGPT report dramatic gains:

97% have used an AI assistant

88% say it makes them more productive

87% say it saves mental energy

90% say it makes coding more enjoyable

These aren’t soft metrics. They also show up in retention, delivery speed, and developer happiness.

And I can attest to that. I’m genuinely less frustrated when I “code” now.

More momentum. Less mental overhead. It's flow state on demand.

But there’s a catch.

You can feel more productive and still be making mistakes.

Because AI isn’t just speeding up code. It’s speeding up everything: good ideas, bad ideas, broken ideas wrapped in nice syntax.

And here’s where things get risky.

Developers love AI. But most don’t fully trust it.

31% say they don’t trust AI-generated code

45% say AI struggles with complex tasks

It’s not surprising.

AI is the perfect pair programmer. It’s relentless and untiring, but just a little too confident.

Especially when it’s wrong.

It’ll hallucinate logic, introduce subtle bugs, and smile while doing it.

And yet… AI’s productivity boost didn’t stop with developers.

It spread.

To indie hackers.

To founders.

To students.

To anyone with a keyboard and a dream.

This is where the real shift began.

Because it’s not just developers using AI anymore.

What Is a Vibecoder?

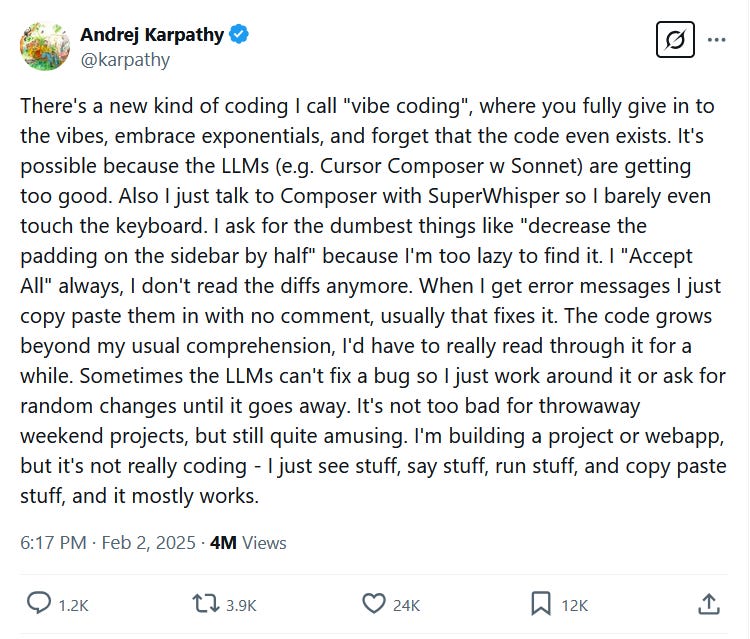

A vibecoder is anyone who uses prompts to build software.

That includes non-developers. It also includes people like me.

I’ve been writing code for years, but these days 99% of what I do starts with vibecoding.

The difference is: I know how to check what the AI gives me.

But not everyone does.

The type of vibecoder we’re talking about here is the one with zero technical background.

They don’t know about software architecture.

They’ve never used git commit.

But they’ve built a working app that talks to Stripe, runs on Vercel, and sends you birthday reminders.

It’s impressive. And terrifying.

Because AI will build exactly what you ask for, even if what you asked for is wrong.

It looks like a polished product until you realize every user shares the same password.

A vibecoder might ship something that works.

But without experience, they can’t evaluate tradeoffs, anticipate edge cases, or recognize when their app is a security liability.

The Security Hole You Didn’t See Coming

The Most Dangerous Dev Isn’t a Hacker. It’s a Vibecoder with a Prompt.

In a study of AI-generated code:

40% of SQL queries were vulnerable to injection attacks

25% exposed XSS risks
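If you’ve never seen what an injectable query looks like, here’s a minimal sketch using Python’s built-in sqlite3 module. The table, the user names, and the function names are made up for illustration. The only thing that changes between the two lookups is whether user input gets pasted into the SQL string or passed as a parameter.

```python
import sqlite3


def make_db() -> sqlite3.Connection:
    """Create a throwaway in-memory database with two hypothetical users."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (name TEXT, email TEXT)")
    conn.executemany(
        "INSERT INTO users VALUES (?, ?)",
        [("alice", "alice@example.com"), ("bob", "bob@example.com")],
    )
    return conn


def find_user_unsafe(conn: sqlite3.Connection, name: str) -> list:
    # Vulnerable: the input is pasted straight into the SQL text,
    # so a quote character in `name` can rewrite the query.
    query = f"SELECT email FROM users WHERE name = '{name}'"
    return conn.execute(query).fetchall()


def find_user_safe(conn: sqlite3.Connection, name: str) -> list:
    # Safer: the ? placeholder sends the input as data, never as SQL.
    return conn.execute(
        "SELECT email FROM users WHERE name = ?", (name,)
    ).fetchall()


conn = make_db()
payload = "nobody' OR '1'='1"  # classic injection string

leaked = find_user_unsafe(conn, payload)   # matches every row
blocked = find_user_safe(conn, payload)    # matches no one named that
print(len(leaked), len(blocked))  # prints "2 0"
```

Both versions run. Both return the right email for a normal name. Only one of them hands over the whole table when someone types a quote mark.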

Many vibecoders don’t know what a .env file is.

They hardcode API keys.

They ship debug flags to prod.

They ship like pros until something breaks and no one knows why.
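For contrast, here’s a rough sketch of the habit experienced developers fall back on: secrets come from the environment, not from the source file. The variable names PAYMENTS_API_KEY and APP_DEBUG and the helper functions are hypothetical, and a real project would usually pair this with a .env file that stays out of version control or with the hosting platform’s secrets settings.

```python
import os


def load_api_key(var_name: str = "PAYMENTS_API_KEY") -> str:
    """Read a secret from the environment and fail loudly if it is missing."""
    key = os.environ.get(var_name, "").strip()
    if not key:
        raise RuntimeError(f"{var_name} is not set; refusing to start without it")
    return key


def debug_enabled() -> bool:
    """Debug mode stays off unless APP_DEBUG is explicitly set to a truthy value."""
    return os.environ.get("APP_DEBUG", "").lower() in {"1", "true", "yes"}


def mask(secret: str) -> str:
    """Show at most the last four characters (none for short secrets) for logging."""
    visible = secret[-4:] if len(secret) > 8 else ""
    return "*" * (len(secret) - len(visible)) + visible
```

With PAYMENTS_API_KEY set in the shell or the host’s dashboard, load_api_key() returns it, and mask() gives you something safe to print. If the variable is missing, the app refuses to start instead of quietly running with a key pasted into the code.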

Because even if the code runs, getting it live is where most vibecoders hit the wall.

And worse: deployment still sucks.

CI/CD? Docker? DNS? Secrets management? These are tasks that AI might help with… but rarely solves end-to-end.

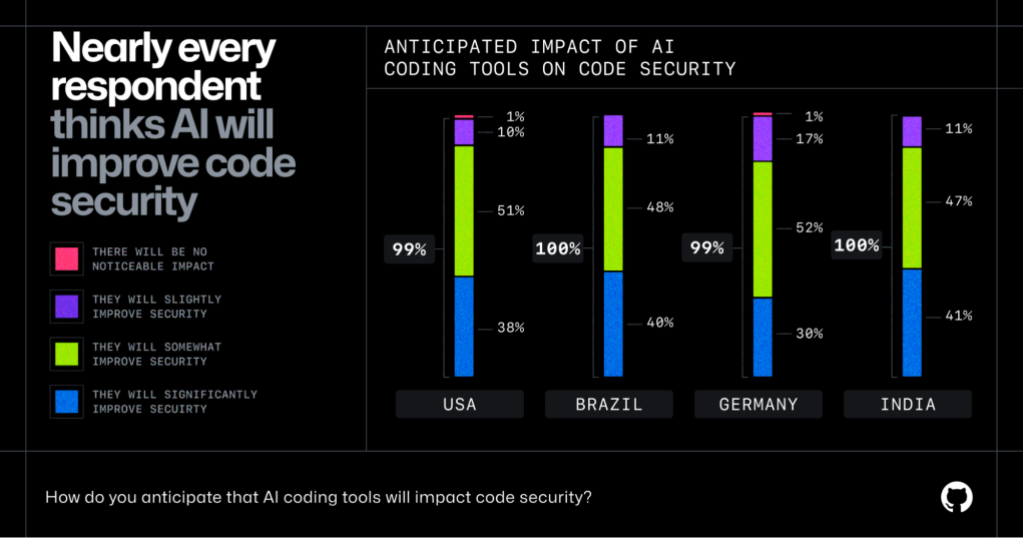

Yes, platforms like Supabase now run real-time security checks.

Bolt and Lovable offer security analysis. GitHub auto-scans for secrets.

But unless the builder understands the implications, these tools are just background noise.

You can’t fix a problem you don’t know exists.

But here’s the truth:

Most of those problems start before a single line of code is written.

Because the real root of all this?

It’s not just what AI generates.

It’s what people ask it to build.

Prompt Engineering Is a Skill. And Most People Suck at It.

So what’s driving all this?

It’s not just that AI lets anyone code.

It’s that most people don’t know how to talk to it.

Because underneath every dangerous AI-generated app isn’t just bad code. It’s a bad prompt.

Everyone talks about prompt engineering like it’s just “English, but fancier.”

Wrong.

Prompt engineering is about mental models.

It’s about knowing what you’re trying to build, what decisions need to be made, and how to instruct the AI to do it right.

Example: “Build me a login page” is not the same as:

“You are a senior software engineer.

Build a login flow with token-based auth using JWT, include CSRF protection, and store tokens securely with refresh logic.”

One gets you a button that looks good.

The other gets you something that doesn’t end up on the r/cybersecurity subreddit.

This is the hidden tax of vibecoding: if you don’t understand the problem, you can’t prompt your way to the right solution.

AI will cheerfully walk you off a cliff with a perfectly formatted commit message.

“Added infinite loop in production. Looks good!”

When AI Codes, Humans Still Make the Mistakes

And this brings us to the core truth:

Even the smartest AI in the world can’t save you from your own bad instructions.

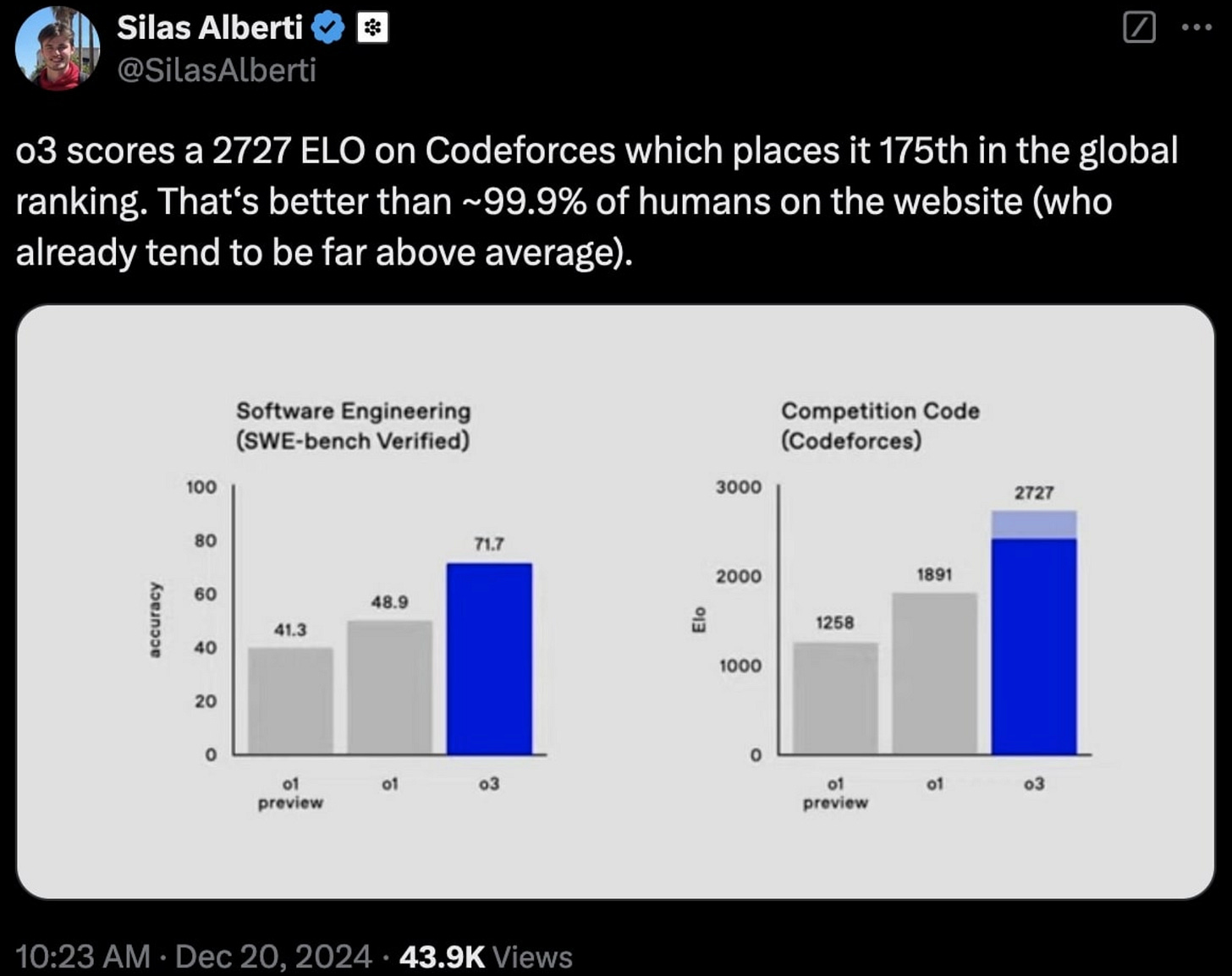

Sam Altman recently noted that OpenAI’s o3 model now benchmarks at roughly the 175th-best competitive programmer in the world.

AI is already outperforming most humans at raw coding.

AI is fast, confident, and obedient.

Give it a flawed prompt, and it will execute perfectly on your bad idea.

But it often won’t ask questions. It won’t second-guess you.

That’s the real risk: not what AI does, but what it doesn’t push back on.

It doesn’t pause to ask: Is this safe? Is this smart? Is this even the right thing to build?

It just ships.

And when that power lands in the hands of someone who doesn’t see the tradeoffs, or worse, doesn’t know there are any, that’s when things break.

Even if AI becomes the best developer you’ll ever hire, the pilot still defines the outcome.

Yes, AI is getting scary good at simulating judgment. But it still operates inside the box you give it.

If your prompt is built on flawed assumptions, it’s still garbage in → confident garbage out.

So the problem isn’t the output. It’s the input.

If you’re vague, it’s vague.

If you’re wrong, it’s wrong-er.

If you assume it knows better, you’ll get burned.

Because at the end of the day, AI will build the tower you ask for.

It’s on you to make sure it doesn’t collapse.

The #1 AI coder in the world will still build the wrong thing if given a broken prompt.

It won’t stop you from driving into a wall.

It’ll just make the wall look prettier on the way down.

So let’s stop asking:

“Can AI replace developers?”

And start asking:

“Can non-developers develop the judgment to guide AI?”

Because if they can’t, we’re not building apps.

We’re building shimmering towers with cracked foundations.

And that’s a vibe no one wants.